DeepSeek-V3.2 and DeepSeek-V3.2-Speciale: Next-Gen Open-Source AI

Do you ever get frustrated by AI tools that break when you give them very long documents, complex code, or multi-step tasks? Maybe your existing model gets slow, misses context, or gives shallow answers when things get complicated. If you work with long reports, codebases, logical reasoning, or creative workflows, this can waste hours, create stress, and block productivity.

That’s exactly why DeepSeek-V3.2 and DeepSeek-V3.2-Speciale matter to you. They promise to solve those pain points and bring reliable AI, even for heavy, real-world workloads. As someone who follows AI advancements closely, I can tell you: these models are built with long-context reasoning, agent-style tool use, and high-performance tasks in mind.

In short, if you need an AI that can think deeply, handle long inputs, do coding or logic problems, or support complex tool workflows, DeepSeek-V3.2 and its “Speciale” variant are among the most capable open-source options right now.

What Are DeepSeek-V3.2 and DeepSeek-V3.2-Speciale?

DeepSeek-V3.2 is the standard release in the V3.2 series of open-source models by DeepSeek. It represents a big jump from previous versions, combining balanced performance with efficiency and support for a wide range of tasks, from conversation to code, reasoning to tool-use.

DeepSeek-V3.2-Speciale, on the other hand, is the “power” version. It is optimized for heavy reasoning, complex problem-solving, and tasks requiring deep logic, math, or long chains of thought. It’s designed for advanced users and developers who need top-tier reasoning and long-context handling.

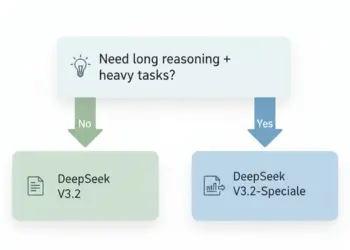

In short: V3.2 = everyday AI workhorse; V3.2-Speciale = heavy-duty reasoning engine.

What Makes Them Special?

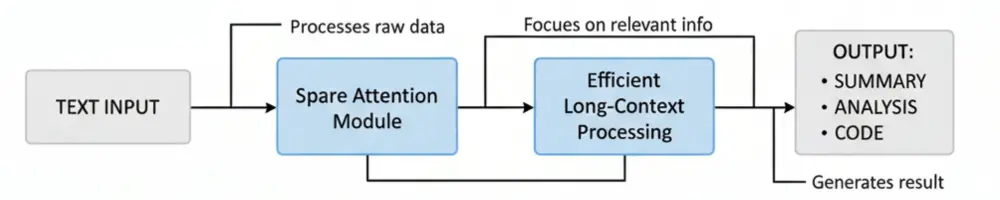

Sparse Attention for Long Context

One of the biggest breakthroughs of DeepSeek-V3.2 and DeepSeek-V3.2-Speciale is the introduction of DeepSeek Sparse Attention (DSA). Instead of the traditional “every token attends to every token” method (which becomes very slow and memory-heavy on long text), DSA uses a smarter approach: for each query, it picks a smaller set of relevant tokens to attend to, drastically reducing compute cost.
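To make the "pick a smaller set of relevant tokens per query" idea concrete, here is a minimal top-k attention sketch in plain Python. It is an illustration of the general technique only, not DeepSeek's actual DSA implementation: the function name `topk_sparse_attention` and the toy vectors are my own, and for simplicity the sketch reuses the attention scores themselves to choose tokens, whereas a real system would need a cheaper way to select them.

```python
import math

def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def topk_sparse_attention(query, keys, values, k):
    """Attend only to the k keys that score highest against the query.

    Hypothetical helper for illustration; not the DSA algorithm itself.
    Returns the output vector and the sorted indices that were selected.
    """
    scale = 1.0 / math.sqrt(len(query))
    scores = [dot(query, key) * scale for key in keys]
    # Keep the indices of the k highest-scoring keys (ties keep original order).
    top = sorted(range(len(keys)), key=lambda i: scores[i], reverse=True)[:k]
    weights = softmax([scores[i] for i in top])
    dim = len(values[0])
    out = [sum(w * values[i][d] for w, i in zip(weights, top)) for d in range(dim)]
    return out, sorted(top)

# Toy example: 4 tokens, 2-dimensional vectors, keep only the 2 most relevant.
keys = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [-1.0, 0.0]]
values = [[1.0, 0.0], [0.0, 1.0], [3.0, 0.0], [9.0, 9.0]]
output, selected = topk_sparse_attention([1.0, 0.0], keys, values, k=2)
print(selected, output)
```

In the toy example, tokens 0 and 2 tie for the highest score, so the softmax and the weighted sum run over those two values only and the other two tokens are skipped.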

In practice, this means the model can handle very long documents, up to 128K tokens of context, at a much lower cost and faster speed. For you, this translates to being able to feed huge reports, long conversations, or multi-file code projects to the AI, something earlier models would struggle with or choke on.
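A rough back-of-the-envelope calculation shows why selecting fewer tokens per query matters at this scale. The sketch below simply counts query-token pairs. Two things are assumptions on my part: it treats 128K as 128,000 tokens, and it uses 2,048 selected tokens per query as an illustrative value rather than a confirmed setting. It also ignores the cost of choosing the tokens in the first place.

```python
def attention_pairs(context_len, selected_per_query=None):
    """Count query-token pairs scored by attention.

    Dense attention compares every token with every token; a sparse scheme
    compares each token with at most `selected_per_query` others.
    """
    if selected_per_query is None:
        return context_len * context_len
    return context_len * min(selected_per_query, context_len)

context_len = 128_000   # assumption: "128K" read as 128,000 tokens
k = 2_048               # assumption: illustrative number of tokens kept per query

dense = attention_pairs(context_len)
sparse = attention_pairs(context_len, k)

print(f"dense pairs:  {dense:,}")               # 16,384,000,000
print(f"sparse pairs: {sparse:,}")              # 262,144,000
print(f"reduction:    {dense / sparse:.1f}x")   # 62.5x
```

The key point is the shape of the growth: dense attention scales with the square of the context length, while the sparse count grows linearly once the context is longer than `k`.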

High-Quality Reasoning & Benchmark Performance

DeepSeek-V3.2 and especially V3.2-Speciale are not just about handling long text; they are built for reasoning, coding, math, logic, and structured problem-solving. According to DeepSeek’s technical report, these models show performance comparable to leading closed-source models (like those from major AI labs), even on tough benchmarks.

In competitive tests (math Olympiad, programming competitions, coding benchmarks), V3.2-Speciale reportedly achieved “gold-medal level” performance. That means tasks previously thought to require top-tier closed models, like complex mathematics, algorithmic coding, and multi-step reasoning, are now accessible via open-source AI.

Agent & Tool-Use Integration

Another big strength: V3.2 supports tool use combined with reasoning, meaning the model doesn’t just generate text, but can reason while calling external tools such as code interpreters, calculators, and search agents. For real-world workflows (coding, research, data analysis), this makes a huge difference.
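If the idea of "reasoning while using tools" feels abstract, the loop below sketches the general pattern: the model either answers or asks for a tool, the program runs the tool, and the result goes back into the conversation. Everything here is hypothetical scaffolding for illustration: `stub_model` is a scripted stand-in rather than a real DeepSeek call, and the message format is my own simplification, not the official API schema.

```python
import json
import operator

OPS = {"+": operator.add, "-": operator.sub, "*": operator.mul, "/": operator.truediv}

def calculator(expression):
    """Toy tool: evaluate 'a op b' (space-separated) without using eval()."""
    left, op, right = expression.split()
    return str(OPS[op](float(left), float(right)))

TOOLS = {"calculator": calculator}

def stub_model(messages):
    """Hypothetical stand-in for a model call; scripted, not a real API."""
    last = messages[-1]
    if last["role"] == "tool":
        # A tool result is available, so produce the final answer.
        return {"type": "answer", "content": f"The result is {last['content']}."}
    # Otherwise request a tool call with JSON-encoded arguments.
    return {
        "type": "tool_call",
        "name": "calculator",
        "arguments": json.dumps({"expression": "12 * 7"}),
    }

def run_agent(user_prompt, model=stub_model, max_steps=5):
    """Alternate between model replies and tool executions until an answer arrives."""
    messages = [{"role": "user", "content": user_prompt}]
    for _ in range(max_steps):
        reply = model(messages)
        if reply["type"] == "answer":
            return reply["content"], messages
        args = json.loads(reply["arguments"])
        result = TOOLS[reply["name"]](**args)
        messages.append({"role": "tool", "name": reply["name"], "content": result})
    raise RuntimeError("agent did not finish within max_steps")

answer, history = run_agent("What is 12 times 7?")
print(answer)
```

To try this against a real model, you would replace `stub_model` with an actual API call and keep the loop as it is. The `max_steps` cap is there so a confused model cannot call tools forever.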

The developers built a massive synthetic dataset (1,800+ environments, 85,000+ complex instructions) during training to make the model robust across coding, search, tool-use, mathematical logic, and multi-step tasks.

Open Source and Accessibility

Unlike many high-power AI models that are closed-source and expensive, DeepSeek releases their models openly (including V3.2 and V3.2-Speciale), making them available on platforms like Hugging Face.

This openness allows developers, researchers, small teams, and even hobbyists to experiment, fine-tune, and deploy, all without paying huge licensing fees. It democratizes access to top-tier AI capabilities.

Comparison of DeepSeek-V3.2 and DeepSeek-V3.2-Speciale

Here’s a quick comparison of the two variants to help you decide which one fits your needs:

| Model | Best For | Strengths | Trade-offs / Considerations |
| --- | --- | --- | --- |
| DeepSeek-V3.2 | Everyday tasks: chat, content generation, moderate reasoning, tool-use, coding/helper tasks, document handling | Balanced performance, efficient inference, tool-calls supported, lower cost | Slightly less powerful than Speciale on heavy reasoning; but more practical for general use |
| DeepSeek-V3.2-Speciale | Heavy-duty reasoning: math problems, algorithmic code, long analysis, academic tasks, large context reasoning | High reasoning capability, gold-medal performance on tough benchmarks, strong logic & math, long-context handling | Higher compute and token usage; tool-calls may be limited (or temporarily disabled); possibly less cost-efficient |

Why Does DeepSeek (and Its New Models) Matter?

Potential Limitations

No model is perfect. While DeepSeek-V3.2 and DeepSeek-V3.2-Speciale make big strides, there are a few caveats to keep in mind:

Practical Use Cases

Here are situations where using these models can give you a real advantage:

How to Get Started?

If you plan to try DeepSeek-V3.2 or Speciale, here are some tips to get the most out of them:

- For everyday tasks: start with V3.2. It is efficient and balanced, supports tool calls, and costs less to run.

- For heavy tasks (math, logic, large context, long reasoning): try V3.2-Speciale.

- Also check DeepSeek Math V3
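If you route requests in code, the rule of thumb above can be written as a tiny helper. This is only a sketch of one possible policy: the function `pick_model`, the task labels, and the choice to fall back to V3.2 whenever tools are needed (because tool calls may be limited on Speciale) are my own assumptions, and the returned strings are the model names used in this article, not confirmed API identifiers.

```python
# Hypothetical task labels treated as "heavy" reasoning work.
HEAVY_TASKS = {"math", "logic", "algorithmic_code", "long_analysis"}

def pick_model(task_type, needs_tools=False):
    """Return the model name this article's rule of thumb would suggest."""
    if needs_tools:
        # Tool calls may be limited on Speciale, so stay on the standard model.
        return "DeepSeek-V3.2"
    if task_type in HEAVY_TASKS:
        return "DeepSeek-V3.2-Speciale"
    return "DeepSeek-V3.2"

print(pick_model("chat"))
print(pick_model("math"))
print(pick_model("math", needs_tools=True))
```

Treat the policy as a starting point and adjust the labels once you have seen how each model handles your own workloads.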

Final Thoughts

If you care about long-context handling, deep reasoning, coding, large-scale text analysis, or building agent-style tools, then DeepSeek-V3.2 (and especially V3.2-Speciale) is a big step forward in open-source AI. It brings power, flexibility, and accessibility, and narrows the gap with expensive closed-source models.

If your tasks are simple and light, you might prefer lighter models for speed and cost. Whenever things get heavy, context-rich or logic-intense, DeepSeek gives you an edge.

For many developers, researchers, and advanced users, this release marks a turning point: powerful, flexible, open-source AI that you can control, run, and build upon.

Give it a try: start with small experiments, see how it handles your workflows, then scale up where it works best.